Digital Diagnosis Without Permission: AI, Health Data, and Colonial Echoes

by Ramya Nadig

Introduction

Artificial Intelligence (AI) is playing an increasingly influential role in transforming health care systems globally. Its applications range from early cancer detection through medical imaging to the use of conversational agents offering guidance on maternal health.

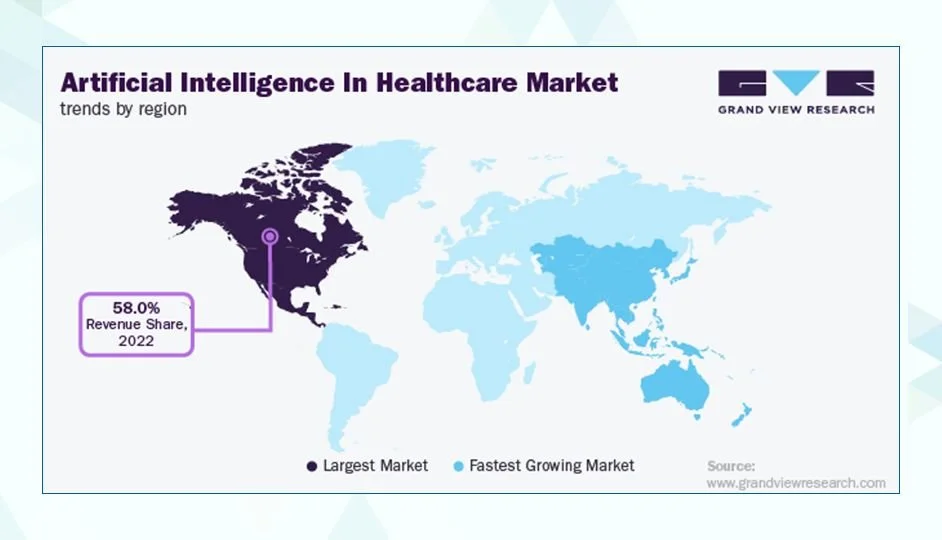

Figure: Artificial Intelligence in Health Care Market by Region (2022). North America holds the largest revenue share (58%), while Asia-Pacific is the fastest-growing market. Source: Grand View Research, via Radixweb.

These technologies are often celebrated for their ability to improve efficiency, expand access, and compensate for resource shortages, particularly in low- and middle-income countries (LMICs), where infrastructure and specialist expertise can be limited.

However, this enthusiasm often obscures a deeper ethical concern: much of the data that powers AI systems is collected in settings where patients may not even know it is being used. In many cases, individuals are unaware that their personal health data is feeding global AI models, or that clinical decisions about their care may be influenced not by a doctor, but by an opaque algorithm.

This raises a fundamental question: What does informed consent truly mean in the age of AI?

This article explores how conventional models of consent fall short in AI-driven health care and argues for a reimagined approach rooted in equity, accountability, and decolonial principles, especially when those most affected are excluded from both the benefits and decision-making processes of these technologies.

The Rise of AI in Health Care

Beyond headline applications such as cancer detection through medical imaging and conversational agents (chatbots) for maternal health guidance, AI technologies are being integrated into everyday clinical workflows to help diagnose, monitor, and manage diseases more efficiently.

This trend is particularly salient in low- and middle-income countries (LMICs), where health care systems frequently struggle with limited infrastructure and shortages of trained professionals. In these contexts, AI has been positioned as a potential solution to expand access to care, support clinical decision-making, and alleviate the burden on overstretched health services. For example, AI tools have been piloted for tuberculosis (TB) screening in Peru and India, using chest X-rays and automated image interpretation. In South Africa, digital maternal health platforms such as MomConnect have reached nearly five million users, demonstrating AI's reach and utility at scale.

Figure: Step-by-step guide to registering with MomConnect, South Africa’s mobile health programme supporting maternal and newborn care via WhatsApp and SMS (Source: National Department of Health, South Africa).

However, the rapid uptake of AI in health care also raises critical ethical and political concerns. A substantial portion of the data used to develop and train AI systems, such as electronic health records, epidemiological trends, and clinical images, originates from LMICs. In many cases, data collection occurs in contexts with limited regulatory oversight and underdeveloped frameworks for ensuring transparency, accountability, and community engagement.

Concerns about vague or insufficiently informed consent are legitimate, especially in large-scale public health programmes and mass data collection efforts, but weak consent is not universal. Documented initiatives show that explicit consent protocols and in-country ethical review are being applied in some LMICs. In South Africa, the MomConnect maternal health programme has been subject to ethical review in evaluation studies, with protocols for participant consent and confidentiality safeguards. In Vietnam, hospitals, including in Da Nang, have piloted AI-based diagnostic tools for infectious diseases with institutional review board approval and patient consent procedures in place. Likewise, in India, the Swaasa AI platform for tuberculosis screening was formally registered in the national clinical trials registry, requiring institutional ethics clearance and written informed consent. These cases suggest that, although uneven, frameworks for consent and ethical oversight are emerging within LMIC contexts.

Still, challenges remain. Scholars have critiqued the prevailing pattern of data flows as a form of data colonialism, in which data are extracted from LMIC populations to develop technologies that are ultimately owned, commercialised, and valorised by institutions in high-income countries. The benefits, whether economic (patents and profits), academic (publications), or technological (prestige and innovation), tend to accrue in the Global North. Meanwhile, the communities that supplied the data often remain excluded from the resulting innovations. In some instances, they encounter systems that are ill-adapted to their local realities.

Furthermore, AI systems trained on datasets that do not reflect the populations they are applied to may reproduce or even exacerbate existing health disparities. Without localized validation and contextual awareness, algorithmic decisions risk reinforcing harmful biases.

This landscape calls for more than technological optimism. It demands a critical interrogation of power, ownership, and accountability: Who controls the data? Who benefits from the AI systems developed from it? And who bears the risks? As AI increasingly acts as a decision-making agent in health care, sometimes effectively replacing the judgment of a physician, questions of informed consent become both more complex and more urgent.

In short, as AI assumes a larger role in global health, particularly in settings with unequal power dynamics, ethical engagement, data governance, and equitable benefit-sharing must be treated as central concerns rather than peripheral ones.

Consent as a Colonial and Ethical Challenge

Informed consent has long been a core principle of ethical medical practice. Traditionally, it involves patients receiving clear, understandable information about a treatment or procedure, including its purpose, risks, benefits, and alternatives, and then voluntarily agreeing to it. This model is based on the idea of a direct relationship between doctor and patient, where communication is transparent and decisions are made within a defined period of time.

However, this model is increasingly challenged by the growing use of artificial intelligence (AI) in health care. Today, health data is often collected not only for immediate care, but also for future reuse in developing AI systems. This reuse can happen multiple times, often without the patient’s knowledge, and across national borders. As a result, the original purpose of data collection becomes unclear, and patients may not be aware that their information is being used in this way.

This issue is especially relevant in low- and middle-income countries (LMICs), where digital literacy tends to be lower and data protection laws are often weak or poorly enforced. In these settings, individuals may not know that their health data is being used for research or AI development. They often receive no clear explanation, no chance to refuse, and no information about how long their data will be used or who will have access to it. In these situations, consent is no longer an active choice, but something that is done to people without their meaningful involvement.

This is not just a technical problem. It reflects deeper power imbalances in global health and technology. Many experts argue that current digital consent systems focus too narrowly on individual agreements and treat consent as a procedural requirement, rather than a meaningful ethical process. This problem is particularly serious in AI development, where data from LMICs is often collected by institutions and companies based in high-income countries. In many cases, there is little or no local oversight, and the communities providing the data do not share in the benefits. Their data becomes a resource that is extracted, while their voices are excluded.

This creates a troubling pattern. The people whose data help train AI systems are often not informed, not compensated, and not included in decision-making. The systems built with their data are usually developed elsewhere, governed by laws they cannot influence, and shaped by values that may not reflect their needs or priorities. This risk is even greater for Indigenous groups and other historically marginalised populations, who have experienced exploitation in medical research in the past.

These conditions raise important ethical questions.

Can people meaningfully agree to broad or future uses of their data if they are not kept informed or given any control over time?

Is it acceptable to share sensitive health data across borders without input from the communities involved?

And if patients are not aware that AI might influence decisions about their care, is their autonomy truly being respected?

There is an ongoing debate about how to make consent more meaningful in this context.

Figure: Comparison of broad and dynamic consent models highlighting autonomy and adaptability in data use. (Source: ResearchGate (2023)).

Some researchers and policymakers support broad consent models, which allow for general use of data in the future. Others propose dynamic consent systems, which give individuals the ability to update their preferences over time. However, these solutions may not be enough. Unless the deeper inequalities in data governance are addressed, particularly around who controls data and who benefits from it, consent will remain limited in practice.
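The difference between the two models can be made concrete with a small data-structure sketch. This is a minimal illustration, not a description of any deployed consent platform: the class name `ConsentRecord`, its fields, and the purpose labels such as `"ai_training"` are all hypothetical. Broad consent collapses to a single one-time decision, while dynamic consent keeps a per-purpose decision that the person can revise, along with a record of every change.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Tuple


def broad_consent_allows(signed_once: bool, purpose: str) -> bool:
    """Broad consent: one signature covers every future purpose."""
    return signed_once


@dataclass
class ConsentRecord:
    """Hypothetical dynamic-consent record; all names are illustrative."""
    person_id: str
    # Current decision for each purpose, e.g. "clinical_care", "ai_training".
    permissions: Dict[str, bool] = field(default_factory=dict)
    # Append-only trail of (timestamp, purpose, decision) for accountability.
    history: List[Tuple[datetime, str, bool]] = field(default_factory=list)

    def update(self, purpose: str, allowed: bool) -> None:
        """Record a new decision; earlier decisions stay in the history."""
        self.permissions[purpose] = allowed
        self.history.append((datetime.now(timezone.utc), purpose, allowed))

    def allows(self, purpose: str) -> bool:
        """Purposes the person was never asked about default to refusal."""
        return self.permissions.get(purpose, False)


record = ConsentRecord("patient-001")
record.update("clinical_care", True)
record.update("ai_training", True)
record.update("ai_training", False)  # the person later withdraws
```

Even in this toy form, the sketch shows the limit the paragraph above describes: the record governs what one individual has agreed to, but says nothing about who operates the system, who can read the history, or who benefits from the data.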

In the context of AI in health care, meaningful consent requires more than individual agreement. It depends on shared accountability, the recognition of data sovereignty, and active participation by communities in how data is governed. Without these structural changes, consent could become a tool used to justify the continued extraction of data from vulnerable populations, rather than a safeguard that protects their rights and dignity.

Algorithmic “Doctors” and the Illusion of Neutrality

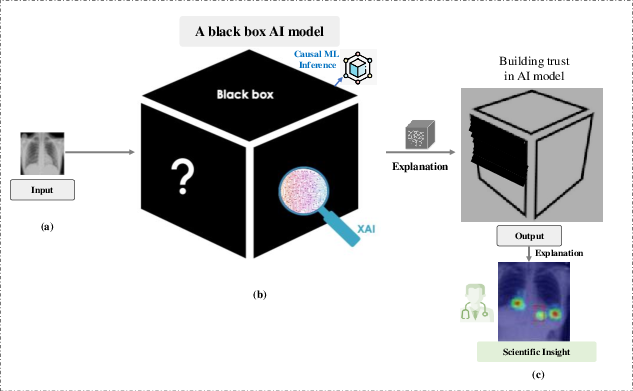

While informed consent is a cornerstone of ethical medical practice, its erosion at the data collection stage reveals how power can be quietly shifted away from patients. Increasingly, this shift deepens with the integration of algorithmic systems into clinical decision-making. Though clinicians remain the final arbiters in patient care, many rely on AI-driven tools for support, and these tools can reframe diagnosis and treatment within computational logics that are not always transparent.

The extent to which these tools shape clinical encounters varies. Some institutions adopt AI conservatively, ensuring clinicians maintain interpretive autonomy. Others integrate systems more heavily into workflows, raising the risk of “automation bias,” where clinicians defer to algorithmic output even when it contradicts other clinical cues. This over-reliance has been documented in fields like radiology, pathology, and emergency medicine. While explainable AI (XAI) methods attempt to mitigate such risks, their success depends on how explanations are communicated, the design of user interfaces, and the training clinicians receive to interpret algorithmic insights effectively.

Figure: A visual representation of how clinical AI systems act as "black boxes," where data goes in and decisions come out without clear explainability. The image also highlights how explainable AI can help build trust. (Source: Abhishek Ghosh, TheCustomizeWindows.com (2024))

The ethical challenges extend beyond clinical settings. Data collected during routine care may be repurposed without adequate consent, raising concerns about secondary data use. The UK’s care.data programme is a well-known example. It was ultimately discontinued due to public backlash over inadequate transparency and opt-out mechanisms. Cases like this illustrate how AI in health care can reinforce power asymmetries, particularly when patients are unaware of how their information is being used or shared.

A persistent myth in digital health is that algorithms are inherently objective and unbiased. In practice, algorithms reproduce the assumptions and limitations embedded in their training data. Many clinical models, for instance, have been trained on datasets skewed toward white, urban, or high-income populations. When these models are applied to patients from marginalised groups, such as Indigenous communities, rural populations, or those in low- and middle-income countries (LMICs), their accuracy often declines. Although evidence of such disparities is mounting, few models undergo rigorous external validation in underrepresented contexts. Initiatives like federated learning for tuberculosis detection in African health systems are promising but remain at an early stage. They also face barriers like uneven internet access and limited technical infrastructure.
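A simple way to see why external validation matters is to report a model's accuracy separately for each population group rather than as one pooled figure. The sketch below is illustrative only: the function name `accuracy_by_group` is hypothetical, and the eight records are invented toy values, not results from any real model or study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return accuracy per group from (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


# Invented toy data for illustration only: (group, true label, model prediction).
records = [
    ("urban", 1, 1), ("urban", 0, 0), ("urban", 1, 1), ("urban", 0, 0),
    ("rural", 1, 0), ("rural", 0, 0), ("rural", 1, 1), ("rural", 1, 0),
]

per_group = accuracy_by_group(records)
pooled = sum(t == p for _, t, p in records) / len(records)
print(per_group)  # {'urban': 1.0, 'rural': 0.5}
print(pooled)     # 0.75
```

In this toy case the pooled accuracy of 0.75 hides the fact that the model is right only half the time for the rural group, which is the kind of gap that validation in underrepresented contexts is meant to surface.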

Race-based adjustments in clinical algorithms exemplify how structural biases can become embedded in statistical tools. Historically, equations estimating kidney function (e.g., eGFR) included race as a variable, often resulting in delayed referrals for Black patients. Although these adjustments were initially justified to improve statistical fit, they have been widely critiqued for lacking transparency and for perpetuating health inequities. In response, many health systems have adopted race-neutral alternatives, particularly following recommendations from professional bodies like the National Kidney Foundation and the American Society of Nephrology. Similar critiques have emerged in pulmonary function, obstetrics, and cardiology, where race has sometimes been used as a proxy for social or environmental factors that could be measured more directly.
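To illustrate the mechanism, consider one widely used equation of this kind, the 2009 CKD-EPI creatinine equation. Choosing it as the example is an assumption made here for illustration, since the text above does not name a specific equation. With serum creatinine $S_{cr}$ in mg/dL and eGFR in mL/min/1.73 m², it takes the form:

$$
\mathrm{eGFR} = 141 \times \min\!\left(\frac{S_{cr}}{\kappa},\, 1\right)^{\alpha} \times \max\!\left(\frac{S_{cr}}{\kappa},\, 1\right)^{-1.209} \times 0.993^{\mathrm{Age}} \times 1.018\ [\text{if female}] \times 1.159\ [\text{if Black}]
$$

where $\kappa = 0.7$ and $\alpha = -0.329$ for women, and $\kappa = 0.9$ and $\alpha = -0.411$ for men. The final multiplier raises the reported value by about 16% for a Black patient with the same creatinine, age, and sex. As a hypothetical example, an unadjusted estimate of 55 would be reported as $55 \times 1.159 \approx 63.7$, which sits above a referral threshold of 60 even though the underlying laboratory result is identical.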

Opacity remains a core challenge in AI deployment. Many machine learning models, especially deep learning systems, function as “black boxes,” offering little insight into how their outputs are derived. This creates a double burden. Patients typically lack the information to understand or contest algorithmic decisions, and clinicians may be equally limited in their interpretive capacity. The regulatory response to this challenge is evolving. For example, the European Union’s AI Act, whose obligations for high-risk systems are scheduled to phase in between 2026 and 2027, sets specific transparency and accountability requirements for high-risk systems in health care. However, when harm occurs, responsibility is often distributed across developers, health care institutions, and individual clinicians. Legal and ethical frameworks are still catching up to these dispersed risks.

Beyond technical concerns, clinical algorithms often reflect unexamined cultural assumptions. Many are designed within biomedical paradigms that overlook linguistic diversity, community-based understandings of health, or culturally specific expressions of pain and distress. While theoretical critiques of this issue are well established, empirical studies linking cultural misalignment to specific misdiagnoses remain rare. Nonetheless, these design gaps risk marginalising non-Western knowledge systems and may reinforce historical patterns of exclusion in medicine.

This centralisation of decision-making power, often in the hands of developers, corporations, or distant institutions, can disempower both patients and frontline clinicians. In contrast, emerging models of community-led and culturally grounded AI governance offer more equitable pathways. For example, Canada’s First Nations Information Governance Centre promotes OCAP principles (Ownership, Control, Access, and Possession), enabling Indigenous communities to guide how their data is used. Similarly, Māori-led health initiatives in Aotearoa New Zealand are developing participatory AI design frameworks rooted in self-determination and cultural relevance.

Ultimately, advancing AI in health care without rethinking its design, implementation, and oversight risks reproducing existing injustices. Equity-focused approaches must center transparency, shared accountability, and cultural responsiveness. These principles are beginning to take shape in both policy and practice.

What Real, Decolonial Consent Could Look Like

As AI systems increasingly shape health care, they often do so in ways that obscure decision-making and reduce patient agency. Traditional informed consent, where patients sign a form once and then entrust opaque systems with their data and care, is proving inadequate in this context. Scholars and practitioners alike argue that meaningful consent must evolve to meet the ethical demands of AI-driven medicine. A decolonial approach offers one such evolution: a vision of consent that is not just individualised and transactional, but collective, continuous, and grounded in justice.

One key alternative is community-based data governance. Rather than treating data as a resource to be extracted and managed by distant institutions, this model centers the authority and values of the communities that the data describe. In Indigenous contexts, for instance, frameworks like the OCAP Principles (Ownership, Control, Access, and Possession) assert collective rights over data relating to Indigenous people, land, and knowledge. Similarly, the CARE Principles (Collective Benefit, Authority to Control, Responsibility, and Ethics) emphasise relational accountability and ethical use of data. These frameworks reflect a view of data not just as information, but as an extension of cultural identity and collective wellbeing. It is important to note, however, that such principles are not implemented uniformly across Indigenous nations or health care systems.

At broader national and regional levels, the idea of data sovereignty is gaining traction, especially in the Global South. Though the term itself may not always be used, its core principles of ethical control, local ownership, and community benefit are appearing in a range of digital governance strategies. The African Union’s digital policy agenda, for example, promotes ethical, people-centered technological development. In Ghana, national initiatives have referenced sovereign and ethical data use, especially through consultations and draft frameworks. However, finalised public policies explicitly articulating “data sovereignty” remain limited. Malawi is also in the early stages of developing AI and data governance mechanisms, often through workshops and planning sessions rather than codified regulation.

These developments point to growing momentum around community-led approaches to data governance. Regional networks, civil society groups, and international organizations including the UNDP, the Health Data Collaborative, and Last Mile Health are actively supporting models that prioritise equity, transparency, and public accountability in health data systems.

For these principles to translate into practice, AI systems must become more transparent and explainable. Current black-box models undermine consent by making it difficult, sometimes impossible, for patients or even clinicians to understand how algorithmic decisions are made. Research highlights the need for tools that can demystify these systems. Techniques such as plain-language summaries, visual aids, and real-time feedback mechanisms can help patients better understand and contest AI recommendations. In some genomic research settings, especially in Indigenous-led pilot projects in Australia and North America, tools like layered consent forms or interactive dashboards have been tested. However, these remain early-stage experiments. Few clinical implementations are well documented, and large-scale adoption is still rare.

Equally crucial is the role of participatory design. Communities should not merely be asked to consent to pre-made tools; they should be directly involved in shaping those tools from the beginning. Scoping reviews of participatory AI development in health care show that co-design with local stakeholders leads to technologies that are more relevant, trusted, and ethically robust.

There are promising examples around the world. The African Union’s digital transformation strategy explicitly promotes people-centered and ethically guided AI development. In Canada, Indigenous-led health data governance initiatives are advancing frameworks like OCAP and CARE, though the extent and form of implementation vary by community. In South and East Asia, projects such as village-level consent models in India or community-based maternal health data efforts in Nepal are beginning to explore locally grounded approaches. Much of this work is still in early phases, and public documentation remains limited.

In this reimagined model, consent is no longer a checkbox or one-time signature. It becomes a sustained process of shared governance. It acknowledges that data is not neutral, that technology carries political weight, and that ethical AI must be co-created with communities, not imposed upon them.

Conclusion

AI in health care holds undeniable potential, but without informed and meaningful consent, it risks becoming another frontier of digital colonialism. The reuse of health data without transparency, particularly in LMICs, reflects broader patterns of historical exploitation, where data is extracted, value is created elsewhere, and communities are left with little say in how their information is used or governed.

This is not just a technical oversight. It is an ethical and political failure. Real consent in the context of AI must go beyond a checkbox. It must be continuous, relational, and grounded in the specific contexts and needs of those whose data is being collected. Models of community governance, participatory design, and Indigenous data sovereignty offer viable and necessary pathways toward more just and equitable practices.

For AI to serve public health equitably, researchers, developers, and policymakers must confront the systems of power that shape how consent is imagined and implemented. Ethical AI must be built not only for efficiency or innovation, but also on foundations of trust, transparency, and shared control. Anything less risks reinforcing the very inequities that health care and technology should be working to dismantle.